- Published on

Challenges of Building LLM Agents and Plugins

- Authors

- Name

- John Hwang

- @nextworddev

Why ChatGPT Plugins and LLM Agents Feel Underwhelming

When ChatGPT Plugins Beta launched in March 2023, many predicted Plugins to become the “next iOS App Store”, and LLM agents to be solving all our mundane problems by now.

But are ChatGPT Plugins - and more broadly, LLM agents - delivering on their hype? It’s time for a sober assessment, and highlight the common challenges and realities of building LLM agents that are actually useful.

In this post, we:

- Identify the main challenges of building “agentic” products like ChatGPT Plugins or LLM Agents.

- the nature of the challenge and why it’s a challenge

- whether it’s a challenge that can be solved with a line of sight

- or, whether it’s a fundamental challenge that plagued chatbots from the previous AI hype cycle (e.g. Alexa, Siri)

- Argue that LLM agents or ChatGPT Plugins aren’t suitable for many use cases, especially for tasks where GUI adds a lot of value, which is the vast majority of “consumer” use cases.

- Propose a simple framework to tell whether a ChatGPT Plugin or an LLM agent is worth building (when should you build an agent?)

TLDR: Most consumer-facing ChatGPT Plugins or LLM agents are solutions in search of a problem, and Chat-based UX is not suitable for many use cases outside of naturally text-dominant areas like writing or coding. LLM agents may be more suitable as glue components between Natural Language and traditional backend services. Issues are typically in 4 buckets: 1) latency, 2) reliability, 3) UX, and 4) trust & security.

Creating engaging natural language apps is very difficult. This proved to be true for Siri and Alexa - where I was a Product Lead - whose Skills ecosystem never took off like we’d hoped. Will Plugins’ fate look like that of Alexa Skill Store, or iOS App Store? Let’s find out.

Challenge 1: UX issues from latency (& overly complicated Tool dispatch model)

If you have tried using any ChatGPT plugin or any LLM agent, you probably thought the experience was very slow and clunky. You are not alone.

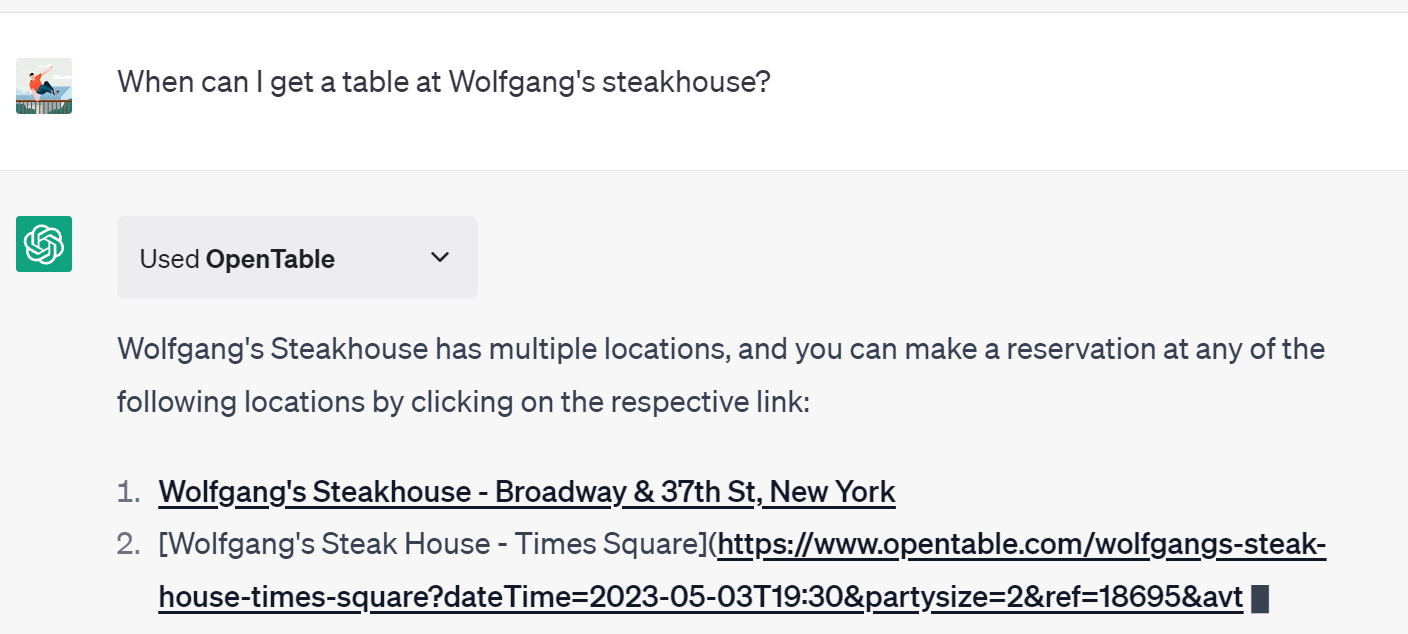

Take the example of using OpenTable ChatGPT Plugin to research nearby restaurants. Even simple queries can sometimes take 3-5 seconds. This feels insufferable compared to doing a quick Google search.

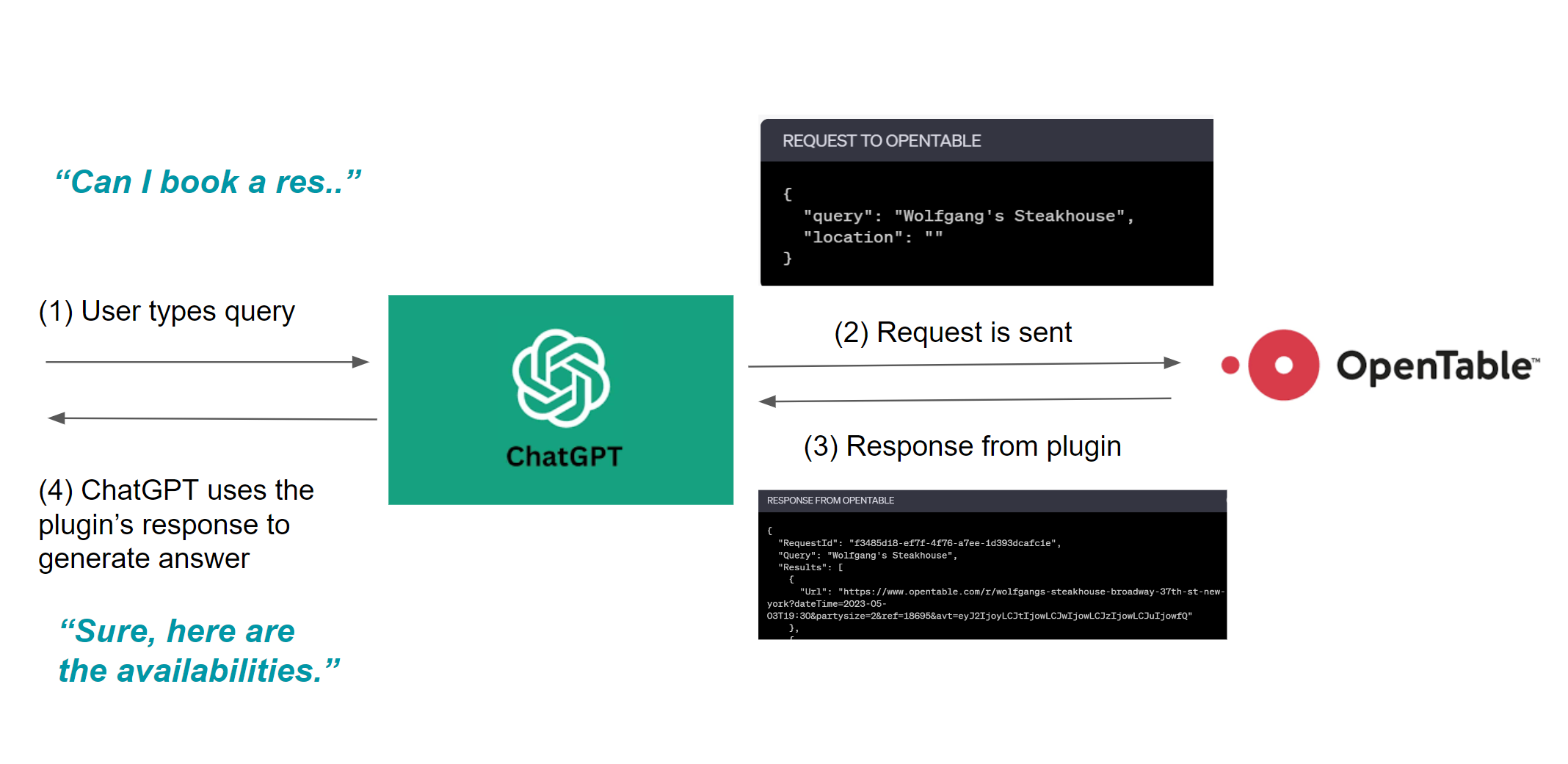

The perceived latency with LLM agents feels high, simply because getting LLMs to use tools / plugins is complicated. Here are the typical steps involved when LLMs use external tools:

- Step 1. tool discrimination: determine whether a tool is needed at all. Often accomplished by getting LLM to output a probability of requiring any tool.

- Step 2. tool retrieval & selection: identify which tool is needed

- Step 3. tool learning: figure out how to use the tool / plugin and construct the request, often by inspecting the API manifest file of the tool

- Step 4. tool dispatch: actually invoking / using the tool

- Step 5. output parsing: extract the output of the tool invocation if needed and relay to the LLM inside the context

- Step 6. test termination condition: figure out if the tool use completed successfully, and whether we (”the LLM”) has sufficient information to move forward.

- Step 7. error correction: if any errors occurred with tool use, identify the root cause, and retry tool use. Depending on the error, we may have to retrace all the way back to tool retrieval!

Under the hood, these steps are orchestrated and carried out as service calls, as the request flow diagram of OpenTable Plugin below shows.

Given this complexity, it’s easy to see how latency adds up, especially if LLM calls were utilized to solve each step.

To reduce latency, multiple steps can be consolidated into a single LLM call (e.g. do tool learning, dispatch, and output parsing in one shot). But no matter the optimization, at least 2 serial LLM calls are needed to complete a single tool-use loop. Some steps like “error correction” - or any introspection or observation - require separate LLM calls that aren’t parallelizable.

And this is problematic because LLM calls are slow, especially if many tokens are involved. The frequent service disruptions and API degradation with OpenAI, etc, only exacerbate the latency issue.

Perhaps this is why OpenAI’s priority is to make GPT-4 faster and cheaper - this certainly will alleviate Plugin or LLM agent latencies. [Update: On June 13th, 2023, OpenAI released additional speed improvements to GPT3.5 and GPT4]

But let’s not forget that 3rd party plugin / tool’s service invocations also add latency, by either 1) taking too long to complete requests, or 2) responding with errors, forcing the LLM to attempt correction.

In the long run, an extremely accurate, low latency GPT-4+ grade LLM, combined with expansion of context windows and updatable parameter memory, etc - may solve most of these problems. But in that case, will ChatGPT even need external tools?

Until then, here are some ideas for reducing latency for LLM agents and ChatGPT plugins: (a comprehensive list of best practices for reducing latency deserves a separate post):

- Consolidate LLM calls for tool interactions where it makes sense. Caveat: the more you consolidate, you may lose some reliability.

- Keep the LLM and tools close to each other (infrastructure-wise). ChatGPT should consider allowing 3rd party plugins to be deployable on OpenAI’s infrastructure. Alexa Skills were deployed on AWS Lambda, which probably saved 100-1500ms of latency.

- Keep the tools as simple and responsive as possible. Don’t cram too many features into a single tool.

Challenge 2: Reliability issues (tool hallucination, invalid requests, inconsistent outputs, etc)

In addition to latency, LLM agents and even ChatGPT plugins are notoriously unreliable, even when provided with cutting edge LLMs like GPT-4 is used as the reasoning engine.

To make matters worse, most techniques for improving reliability rely on introducing more LLM calls - which only worsens latency!

By “unreliable”, I mean issues like:

- tool hallucination: trying to use tools that don’t exist

- over-eagerness in using tools: trying to use a tool when none is needed. This is why it’s helpful to use a tool discriminator to let LLM decide separately whether it needs a tool

- request parameter hallucination: hallucinating request body parameters or JSON shape, leading to invalid HTTP requests to plugins or tools. (Update: OpenAI’s functions feature supposedly helps with request parameter hallucination issue, but even OpenAI admits that hallucinations are still possible)

- action planning / tool selection inconsistency: using a different set of tools (or the same tools in different orders) each time it’s used. This happens when multiple similar tools are available at once.

- output format inconsistency: LLMs may output or respond in inconsistently for each user depending on

To counter some of these issues, typically developers try 1) reducing the number of tools provided to LLM, and 2) making each tool simpler and dumb. For example, ChatGPT Plugins “forces” the end user to active only up to X Plugins at once. But this introduces more friction to UX, and it doesn’t really solve the problem fundamentally.

[Update 6/13/2023] The release of Function Call feature for GPT may solve this, but OpenAI warned in the documentation that there’s still a chance that GPT hallucinates request parameters, and so on.

The obvious solution would be to have a much faster (3x+) and cost-efficient GPT-4. In that case, we could add a separate LLM call just to validate request parameters BEFORE invoking external plugins.

But latency and reliability aren’t the biggest challenges you will face in building LLM agents. It’s UX.

Challenge 3: UX issues with LLM agents and ChatGPT plugins

Even if latencies and reliability improve for LLM APIs, there’s still the elephant in the room: Is Chat or LLM Agents even the right interface for the end user, versus say, a GUI?

For example, do I really want to use ChatGPT to book a table at a restaurant? I’d rather scroll through a bunch of pictures of restaurants, click on menus, etc. I’m sure most other people share my feelings.

Simply put, natural language is not a better user interface than GUIs for most use cases. There’s a reason why GUIs were invented to begin with, and users often feel constrained when limited to typing or speaking. This, imo, was the biggest reason why Siri or Alexa failed to engage users.

Instead of shoehorning a LLM Chatbot into every application, we should consider whether Chat is appropriate at all. Here’s a rule of thumb: chatbots or LLM agents only make sense for the end user when the task itself is already text or conversation heavy. There’s no surprise in writers and coders being early adopters for ChatGPT, or that the AI was well-received for customer support functions.

For all other use cases, ask whether Chat works better than a GUI. If not, consider 1) providing LLM agents or plugins as a feature inside a GUI-driven app, or 2) utilizing LLMs agents in the backend only.

Challenge 4: Tool Quality

Tool-using LLM agents are only as useful as their tools’ quality. To incentivize higher quality tools and agents to be developed, the platform needs to help and incentivize developers, specifically with:

- Tool discoverability. If you are a plugin / tool developer, how do you get discovered?

- Monetization: Alexa was never able to monetarily reward its Skill developers meaningfully. Would ChatGPT be any different?

These issues will need to be addressed eventually. Otherwise, developers will lose interest in contributing new Plugins or making tools for agents.

Challenge 5: Trust and Security

Related to quality is trust. Could you entrust a LLM agent with any non-trivial task, and with your data?

Plugin / LLM agent users face the following challenges with tools:

- Lack of Perceived Control: Users have no control over when to invoke the tool (LLM is doing it), and how the tool executes

- Trust: Is it safe to use some random 3P plugin or tool? This is especially problematic if the tool has access to your private data or can take “admin” level actions (like sending emails).

- Security: How do we verify that 3P plugins and tools don’t go rogue, or inject malicious tokens?

It’s currently unclear how watertight OpenAI’s plugin onboarding process is. And needless to say, any 3P / Langchain / LLamaindex plugin you find on the Internet should probably not fully trusted, just like any NPM or PyPi module.

Should You Build a LLM Agent or ChatGPT Plugin?

So far, we have discussed the numerous challenges of building Plugins and Agents, which should help frame whether you should build one yourself.

It seems like good use cases for Chat-driven plugins and agents are:

- Narrow business tasks that are amenable to programmatic validation such as code gen are ripe for

- Workflows that are text or conversation dominant already, such as coding, reading, or content creation of any form.

- Niche, long form data source providers via data retrieval plugins (for retrieval augmented generation)

- Code Interpreters

The common theme here is that plugins and agents are most additive to tasks that ChatGPT is already used heavily for, such as coding or writing. For other use cases like entertainment or narrow-domain tasks (like looking up nearby businesses), plugins and agents aren’t well suited.

As LLM latency and reliability improves, many of the current technical challenges will go away, and user experience should improve - but that won’t be enough to drive mainstream adoptions for LLM agents or ChatGPT Plugins. So it’s more productive for developers to build agents or plugins for use cases that make sense, instead of trying to shoehorn agents into every product.